Google T5 Translation as a Service with Just 7 Lines of Code

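What is T5? The Text-To-Text Transfer Transformer (T5) from Google treats every language task, translation included, as turning one text into another: you give it an input such as "translate English to German: Good morning." and it generates the output text.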

In this article, we will deploy the Google T5 model as a REST API service. Sounds difficult? What if I told you that you only need to write 7 lines of code?

Install Dependencies

HuggingFace

pip install "transformers[torch]"

If it doesn’t work, please visit the Installation page and check the official HuggingFace documentation.

Pinferencia

pip install "pinferencia[streamlit]"

Define the Service

First, let’s create app.py to define the service:

[Code listing: app.py, 13 lines]

Start the Service

To start only the backend (the REST API), run:

$ uvicorn app:service --reload
INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)
INFO: Started reloader process [xxxxx] using statreload
INFO: Started server process [xxxxx]
INFO: Waiting for application startup.
INFO: Application startup complete.

To start both the Streamlit frontend and the uvicorn backend, run:

$ pinfer app:service --reload
Pinferencia: Frontend component streamlit is starting...
Pinferencia: Backend component uvicorn is starting...

Test the Service

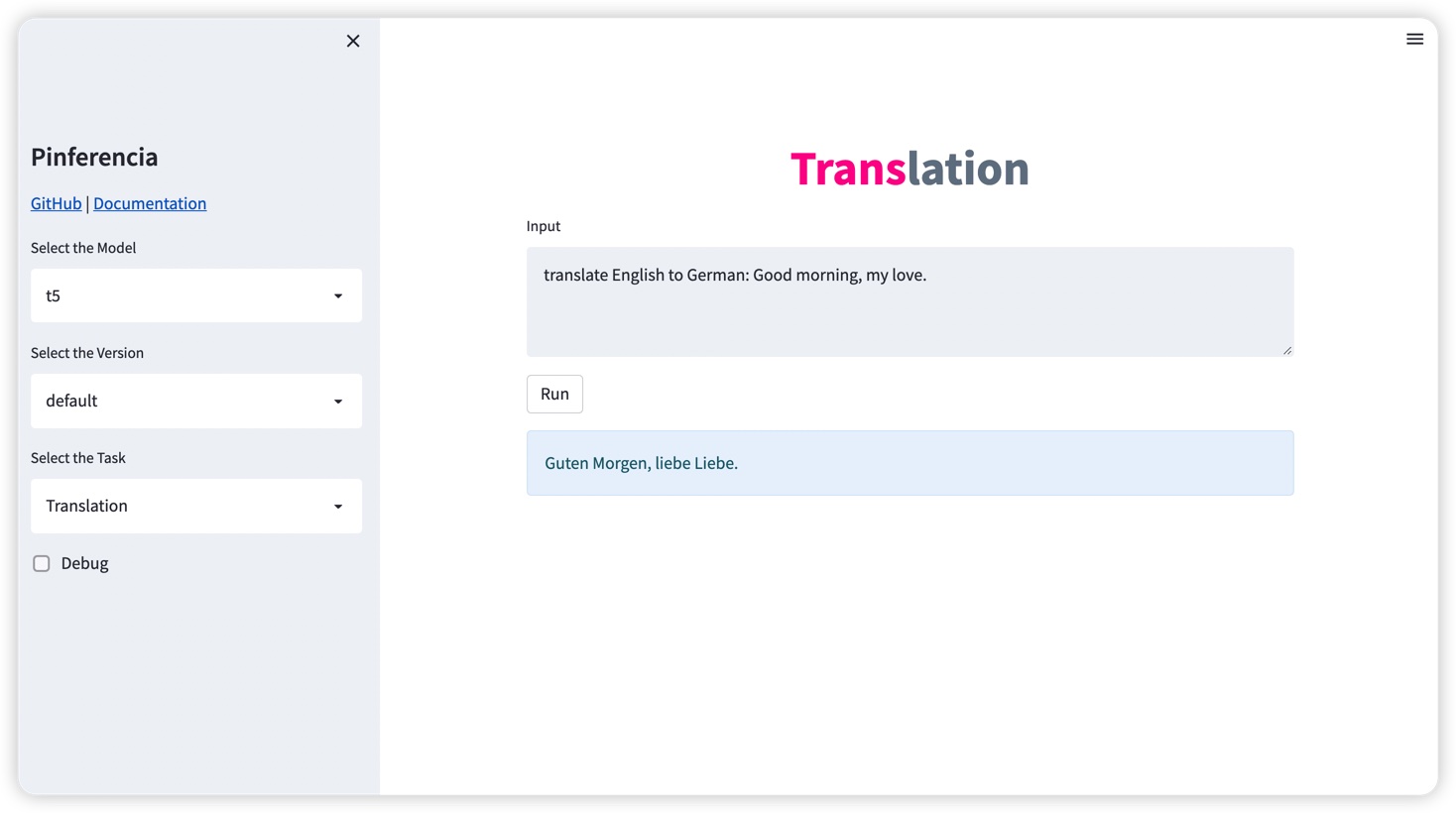

If you started the service with pinfer, open http://127.0.0.1:8501 in your browser; the Translation template is selected automatically.

You can also call the REST API directly with curl:

curl -X 'POST' \
  'http://localhost:8000/v1/models/t5/predict' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{
    "parameters": {},
    "data": ["translate English to German: Good morning, my love."]
  }'

Result:

{
  "model_name": "t5",
  "data": [{"translation_text": "Guten Morgen, liebe Liebe."}]
}

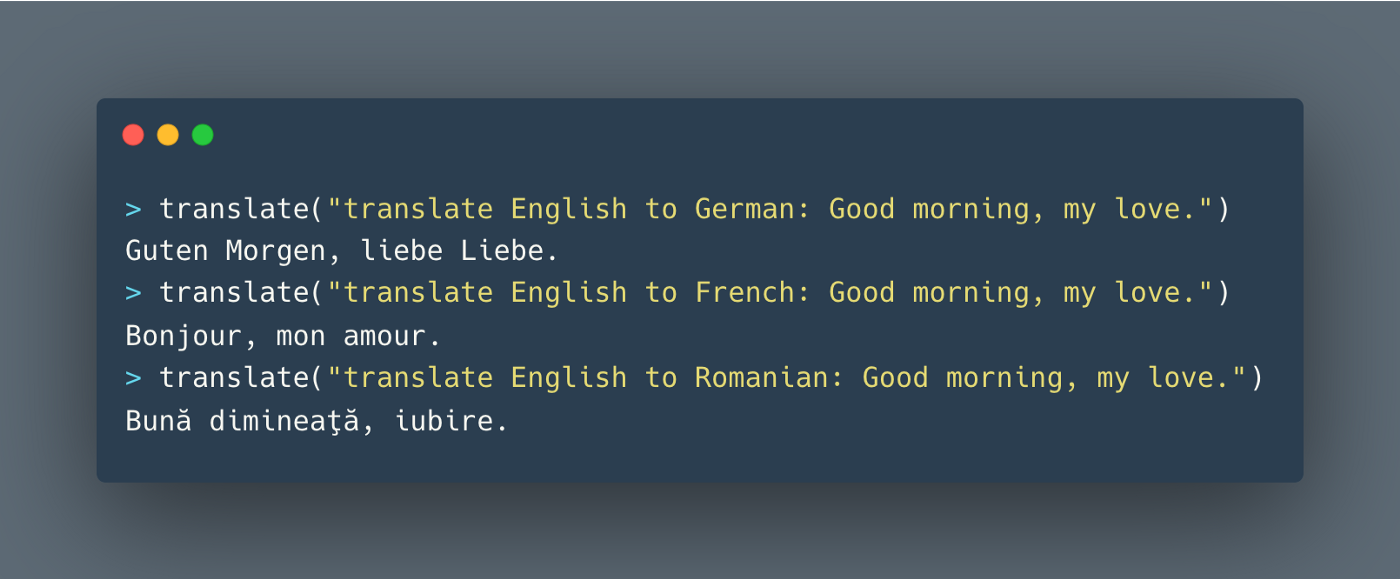

[Code listing: test.py, 9 lines]
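A standard-library-only sketch of such a client follows; it is not the original test.py. It uses urllib.request so no extra package is needed, sends the same payload as the curl example to the assumed local endpoint http://localhost:8000/v1/models/t5/predict, and pulls the translated strings out of the response’s data field. Because the exact shape of each item depends on what the registered function returns, it accepts both objects with a translation_text key and bare strings. The helper names build_request and extract_translations are hypothetical, introduced only for this sketch.

```python
# test.py: a stdlib-only client sketch, not the original listing.
import json
import urllib.error
import urllib.request

# Endpoint and payload copied from the curl example; assumes the backend
# is running locally on port 8000.
PREDICT_URL = "http://localhost:8000/v1/models/t5/predict"


def build_request(texts, url=PREDICT_URL):
    """Hypothetical helper: build a POST request mirroring the curl example."""
    body = json.dumps({"parameters": {}, "data": texts}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"accept": "application/json", "Content-Type": "application/json"},
        method="POST",
    )


def extract_translations(response_body):
    """Hypothetical helper: return plain strings from the "data" field.

    Handles items shaped like {"translation_text": ...} as well as bare strings.
    """
    payload = json.loads(response_body)
    return [
        item["translation_text"] if isinstance(item, dict) else item
        for item in payload["data"]
    ]


if __name__ == "__main__":
    request = build_request(["translate English to German: Good morning, my love."])
    try:
        # Generous timeout: a prediction on CPU can take a while.
        with urllib.request.urlopen(request, timeout=60) as response:
            translations = extract_translations(response.read())
    except urllib.error.URLError as exc:
        print("Request failed; is the service running?", exc)
    else:
        # ensure_ascii=False keeps German characters such as "ü" readable.
        print("Prediction:", json.dumps(translations, ensure_ascii=False))
```

Save it as test.py and run it while the backend is up; if nothing is listening on port 8000, it prints the connection error instead of a prediction.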

Run python test.py, and it prints the result:

Prediction: ["Guten Morgen, liebe Liebe."]

Even better, open http://127.0.0.1:8000 to see the full, interactive documentation of your API.

You can also send prediction requests right from that page!