Image Classification

In this tutorial, we will explore how to use a Hugging Face pipeline and how to deploy it with Pinferencia as a REST API.

Prerequisite

Please visit Dependencies

Download the model and predict

The model will be automatically downloaded.
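As a minimal sketch of this step, assuming the `transformers` library is installed and the default `image-classification` pipeline is used. The helper names `classify_image` and `top_label` are hypothetical and only serve the illustration:

```python
def classify_image(image_url):
    """Classify one image with the default image-classification pipeline."""
    # Imported lazily so that top_label() below works without transformers.
    from transformers import pipeline

    # Building the pipeline downloads the default model on first use.
    classifier = pipeline(task="image-classification")

    # The pipeline accepts an image URL and returns a list of
    # {"label": ..., "score": ...} dictionaries.
    return classifier(image_url)


def top_label(predictions):
    """Return the label of the highest-scoring prediction."""
    return max(predictions, key=lambda p: p["score"])["label"]
```

Calling `classify_image(...)` with the URL of an image should return a list shaped like the result shown here, and `top_label` picks the most likely class from it.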


Result:

[{'label': 'lynx, catamount', 'score': 0.4403027892112732},

{'label': 'cougar, puma, catamount, mountain lion, painter, panther, Felis concolor',

'score': 0.03433405980467796},

{'label': 'snow leopard, ounce, Panthera uncia',

'score': 0.032148055732250214},

{'label': 'Egyptian cat', 'score': 0.02353910356760025},

{'label': 'tiger cat', 'score': 0.023034192621707916}]

Amazingly easy! Now let's try deploying it.

Deploy the model

Without deployment, how could a machine learning tutorial be complete?

First, let's install Pinferencia.

pip install "pinferencia[streamlit]"

[Code listing: app.py]
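A minimal sketch of what app.py might contain, assuming Pinferencia's `Server` class and its `register(model_name=..., model=...)` method. The helpers `make_predict` and `create_service` are hypothetical names introduced here for illustration:

```python
def make_predict(classifier):
    """Wrap a classifier in the single-argument function the server calls."""

    def predict(data):
        # `data` is the value of the "data" field of the request body,
        # here the URL of an image.
        return classifier(data)

    return predict


def create_service():
    """Build the app and register the classifier under the name "vision"."""
    # Imported lazily: both packages are only needed at serving time.
    from pinferencia import Server
    from transformers import pipeline

    service = Server()
    service.register(
        model_name="vision",
        model=make_predict(pipeline(task="image-classification")),
    )
    return service
```

In app.py, a final line `service = create_service()` would expose the app at module level, so that it could be started with a command such as `pinfer app:service` (an assumption, since the start command is not shown here). The model name `vision` matches the `/v1/models/vision/predict` path used in the requests.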

Easy, right?

Predict

curl --location --request POST 'http://127.0.0.1:8000/v1/models/vision/predict' \

--header 'Content-Type: application/json' \

--data-raw '{

"data": "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg"

}'

Result:

Prediction: [

{'score': 0.433499813079834, 'label': 'lynx, catamount'},

{'score': 0.03479616343975067, 'label': 'cougar, puma, catamount, mountain lion, painter, panther, Felis concolor'},

{'score': 0.032401904463768005, 'label': 'snow leopard, ounce, Panthera uncia'},

{'score': 0.023944756016135216, 'label': 'Egyptian cat'},

{'score': 0.022889181971549988, 'label': 'tiger cat'}

]

[Code listing: test.py]
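A minimal sketch of what test.py might look like, written with only the standard library so it runs without extra packages. The names `build_request` and `predict` are hypothetical. Where the prediction sits in the response JSON is not shown in this tutorial, so the sketch returns the whole parsed body:

```python
import json
import urllib.request

PREDICT_URL = "http://127.0.0.1:8000/v1/models/vision/predict"
IMAGE_URL = (
    "https://huggingface.co/datasets/huggingface/documentation-images/"
    "resolve/main/pipeline-cat-chonk.jpeg"
)


def build_request(data, url=PREDICT_URL):
    """Build a JSON POST request whose body is {"data": ...}."""
    body = json.dumps({"data": data}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(data, url=PREDICT_URL):
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(build_request(data, url)) as response:
        return json.load(response)
```

With the server running, `print(predict(IMAGE_URL))` sends the same request as the curl command above.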

Run python test.py to get the result:

Prediction: [

{'score': 0.433499813079834, 'label': 'lynx, catamount'},

{'score': 0.03479616343975067, 'label': 'cougar, puma, catamount, mountain lion, painter, panther, Felis concolor'},

{'score': 0.032401904463768005, 'label': 'snow leopard, ounce, Panthera uncia'},

{'score': 0.023944756016135216, 'label': 'Egyptian cat'},

{'score': 0.022889181971549988, 'label': 'tiger cat'}

]

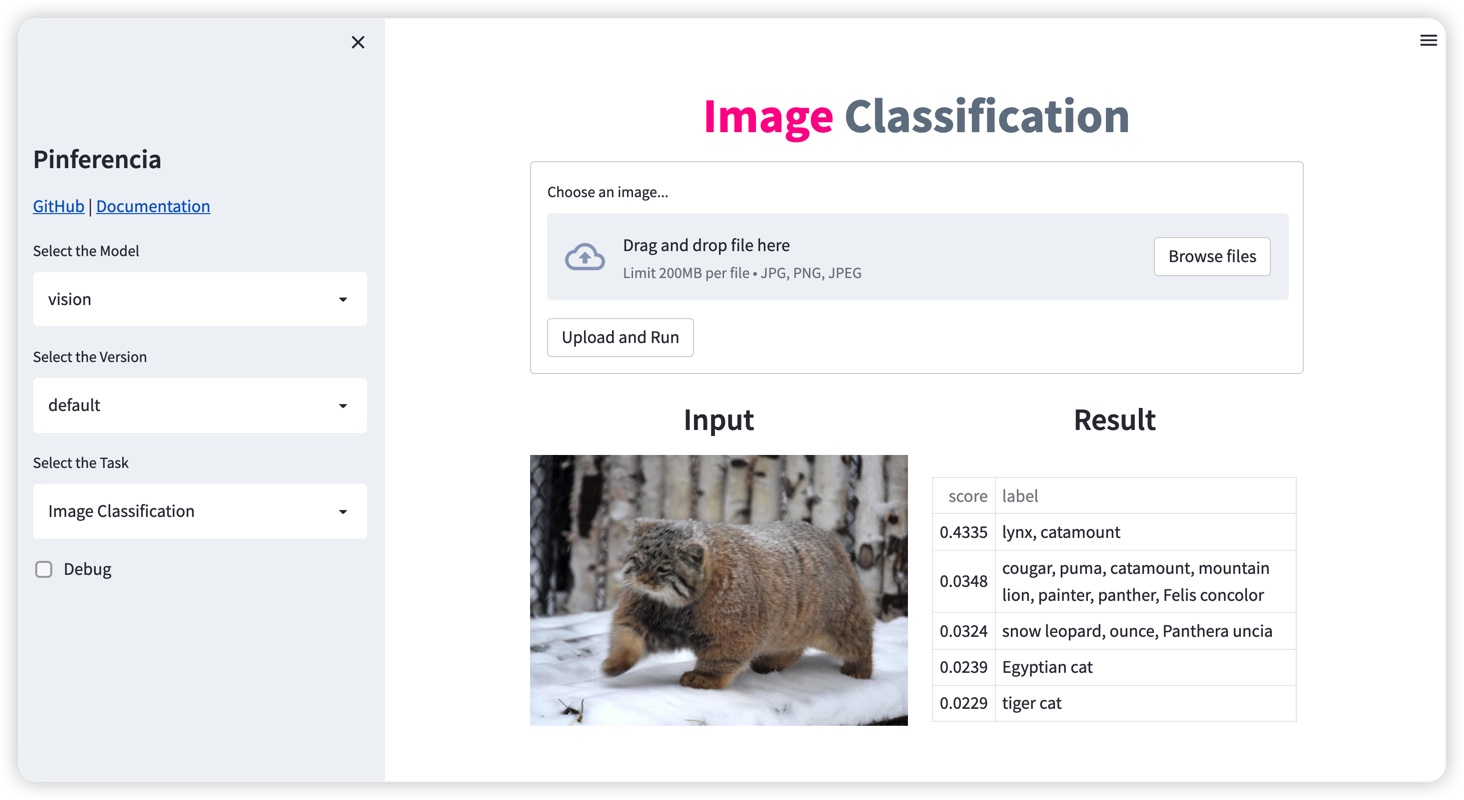

Even cooler, go to http://127.0.0.1:8501, and you will have an interactive UI.

You can send prediction requests right from there!

Improve it

However, passing the URL of the image is not always convenient.

Let's modify app.py slightly to accept a Base64-encoded string as the input.

[Code listing: app.py]
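A minimal sketch of the Base64 handling such an app.py might use, assuming Pillow is installed and the request carries a single Base64-encoded image in its "data" field. The names `decode_base64` and `make_predict` are hypothetical. The sketch does not cover any metadata the service may need for the UI to select its Image Classification template automatically:

```python
import base64
from io import BytesIO


def decode_base64(encoded):
    """Decode a Base64 string into an in-memory binary stream."""
    return BytesIO(base64.b64decode(encoded))


def make_predict(classifier):
    """Wrap a classifier so that it accepts a Base64-encoded image string."""

    def predict(data):
        # Pillow is imported lazily; it is only needed once a request arrives.
        from PIL import Image

        # Open the decoded bytes as an image and hand it to the classifier.
        image = Image.open(decode_base64(data))
        return classifier(image)

    return predict
```

The function returned by `make_predict(...)` can then be registered under the model name `vision`, just like a predict function that takes a URL.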

Predict Again

Open http://127.0.0.1:8501, and the Image Classification template will be selected automatically.

curl --location --request POST 'http://127.0.0.1:8000/v1/models/vision/predict' \

--header 'Content-Type: application/json' \

--data-raw '{

"data": "..."

}'

Result:

Prediction: [

{'score': 0.433499813079834, 'label': 'lynx, catamount'},

{'score': 0.03479616343975067, 'label': 'cougar, puma, catamount, mountain lion, painter, panther, Felis concolor'},

{'score': 0.032401904463768005, 'label': 'snow leopard, ounce, Panthera uncia'},

{'score': 0.023944756016135216, 'label': 'Egyptian cat'},

{'score': 0.022889181971549988, 'label': 'tiger cat'}

]

[Code listing: test.py]
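A minimal sketch of how a test.py might prepare the Base64 payload, using only the standard library. The helper names `encode_bytes`, `encode_image` and `build_body` are hypothetical:

```python
import base64
import json


def encode_bytes(raw):
    """Return raw bytes as a Base64 string that is safe to embed in JSON."""
    return base64.b64encode(raw).decode("ascii")


def encode_image(path):
    """Read an image file and return its contents as a Base64 string."""
    with open(path, "rb") as f:
        return encode_bytes(f.read())


def build_body(encoded):
    """Build the JSON request body {"data": "<Base64 string>"} as bytes."""
    return json.dumps({"data": encoded}).encode("utf-8")
```

The bytes from `build_body(encode_image(...))`, given the path of a local image, can be sent as a POST request with the `Content-Type: application/json` header to the same `/v1/models/vision/predict` endpoint as before.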

Run python test.py to get the result:

Prediction: [

{'score': 0.433499813079834, 'label': 'lynx, catamount'},

{'score': 0.03479616343975067, 'label': 'cougar, puma, catamount, mountain lion, painter, panther, Felis concolor'},

{'score': 0.032401904463768005, 'label': 'snow leopard, ounce, Panthera uncia'},

{'score': 0.023944756016135216, 'label': 'Egyptian cat'},

{'score': 0.022889181971549988, 'label': 'tiger cat'}

]